Hi all,

I have a visual map (shapes with text, connected by arrows) of the many variables that affect a particular context, with the various connections shown. At an online workshop, I want the 15 or so participants to be able to indicate which variables they think are most significant and which are least significant (using a scale of 1-5 or a colour scale). There are around 30 variables, and I hope all 15 participants will vote on every variable.

We will then discuss the ones that score highest. My challenge is making this easy for participants who have not used Miro before.

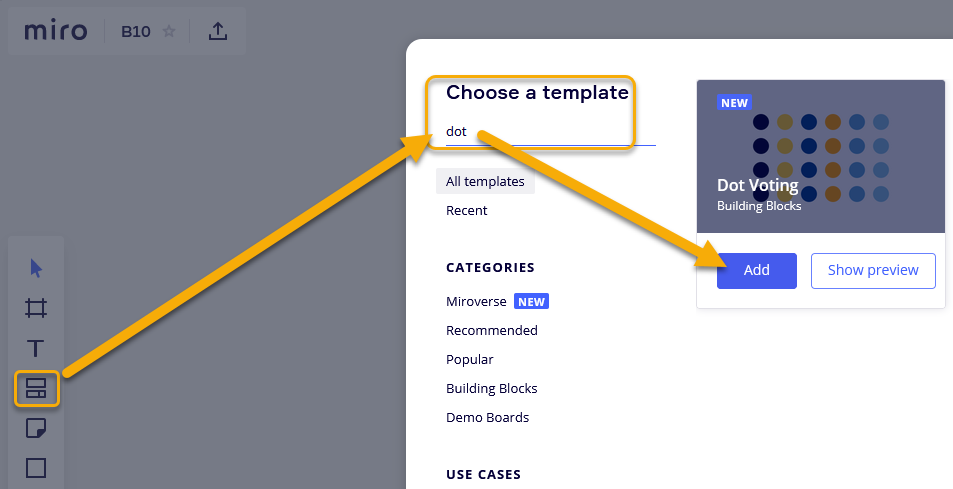

I thought about using coloured dots, but cannot find any! One can make dots using the circle shape, but I'm at a loss as to how to fix their size (visually it won't work if the dots are different sizes).

I'm also considering using the number emojis, hoping people won't resize them manually. But because they are all grey, the visual impact is weaker.

Any idea how to create a ‘dot’ with fixed size?

Or any better ideas for how people can 'rate' the different text boxes on a mind map?

Thanks